
OUR MISSION OF RADICAL TRANSPARENCY

HOW WE SCORE

Everything we do is about stopping fake reviews and putting the power back into the hands of the consumer. Below is how we use scoring to do that.

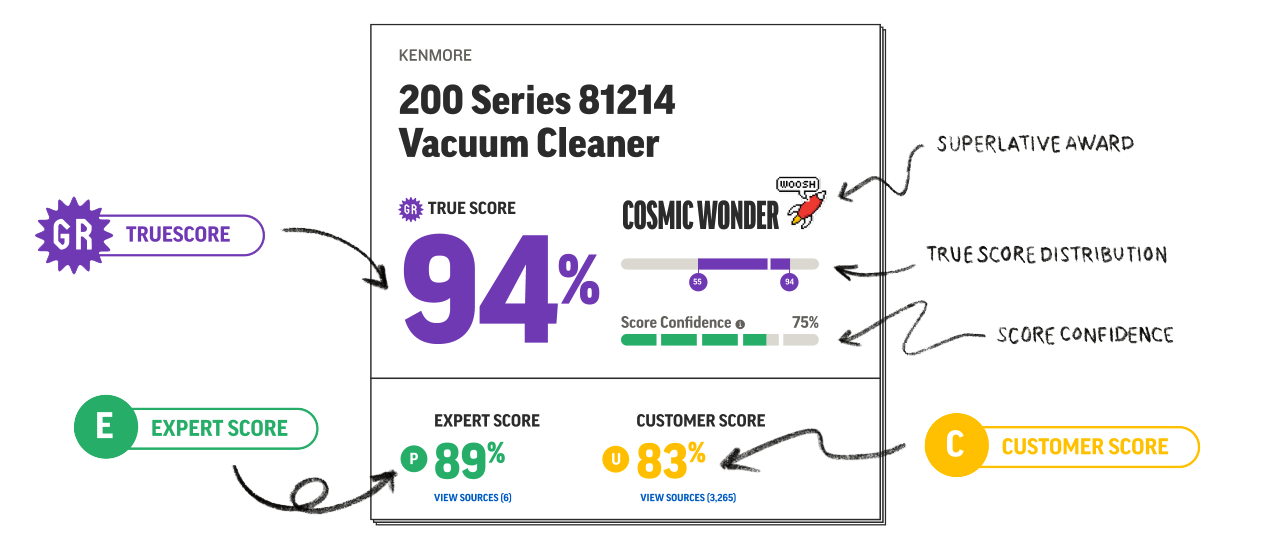

TRUE SCORE


The True Score is a 0-100 logarithmic scale and a leading indicator of a product’s quality, utility, and value. It is standardized from a weighted combination of the Expert Score and the Customer Score.

The 0-100 True Score is calculated by weighting the customer ratings and the expert test scores (statistical backing and relevance for this weighting are still needed).


The Concept

TrueScore Distribution is an at-a-glance way to know where any product you’re looking at lies compared to other similar products. On its own, a laptop with a TrueScore of 88% is a good laptop.

But when you look at the TrueScore Distribution, you see that laptop scores range from 65% to 89%: the worst laptop hit 65%, and the best hit 89%. That puts an 88% laptop very near the top of its category.
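One way to make that comparison concrete is to place a product’s TrueScore within the scores of its category. The sketch below is an illustration, not Gadget Review’s method: the helper name, the “share of the category scoring lower” measure, and every laptop score other than 65, 88, and 89 are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DistributionPosition:
    low: float          # worst TrueScore in the category
    high: float         # best TrueScore in the category
    share_below: float  # fraction of the category scoring strictly lower


def position_in_distribution(score: float, category_scores: list[float]) -> DistributionPosition:
    """Place one product's TrueScore within its category's TrueScore Distribution."""
    if not category_scores:
        raise ValueError("category_scores must not be empty")
    below = sum(1 for s in category_scores if s < score)
    return DistributionPosition(
        low=min(category_scores),
        high=max(category_scores),
        share_below=below / len(category_scores),
    )


# Hypothetical laptop category whose scores range from 65 to 89.
laptops = [65, 70, 74, 78, 81, 83, 85, 88, 89]
pos = position_in_distribution(88, laptops)
print(pos.low, pos.high, round(pos.share_below, 2))  # 65 89 0.78
```

In this made-up category, the 88% laptop sits just below the 89% top scorer, which is the context the distribution adds to a bare score.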

verification

TrueScores don’t just pop into existence without any backing. Beyond the Expert Scores and Customer Scores they’re built from (more on those later), TrueScores are also compared against the results of established product testers with over a decade in the game. We hold our TrueScores up against brands like Consumer Reports, Which.co.uk, and Choice.com.au to help us make important decisions.

score confidence

Score Confidence rates how much we trust a product’s score, based on the Trust Scores of the sources behind it and on how many sources there are.



Calculating the Score Confidence

- Percent Average Trust Score (PATS), where $T_1, T_2, \dots, T_n$ are the Trust Scores (0-100) of the $n$ sources used:

$$\text{PATS} = \frac{T_1 + T_2 + \cdots + T_n}{n \times 100}$$

- CONMOD (Confidence Modifier), where $n \le 5$:

$$\text{CONMOD} = 1 - (5 - n) \times 0.05$$

- Score Confidence:

$$\text{Score Confidence} = \text{PATS} \times \text{CONMOD} \times 100$$

- Example: four sources with Trust Scores of 87, 94, 74, and 66

$$\text{PATS} = \frac{87 + 94 + 74 + 66}{4 \times 100} = \frac{321}{400} = 0.8025$$

$$\text{CONMOD} = 1 - (5 - 4) \times 0.05 = 0.95$$

$$\text{Score Confidence} = 0.8025 \times 0.95 \times 100 = 76.2375 \approx 76\%$$

- If this product had enough sources (five), CONMOD would be 1 and it would have scored 80%.
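The Score Confidence formula above translates directly into a few lines of Python. This is a sketch: the function name is hypothetical, and rejecting more than five sources (rather than, say, capping CONMOD at 1) is an assumption, since the formula is only defined for n ≤ 5.

```python
def score_confidence(trust_scores: list[float]) -> float:
    """Score Confidence (0-100) from the Trust Scores (0-100) of the sources used."""
    n = len(trust_scores)
    if not 1 <= n <= 5:
        # The formula is only defined for n <= 5; rejecting other counts is an assumption.
        raise ValueError(f"expected between 1 and 5 sources, got {n}")
    # Percent Average Trust Score: the mean Trust Score as a fraction of 100.
    pats = sum(trust_scores) / (n * 100)
    # Confidence Modifier: drops by 0.05 for each source short of five.
    conmod = 1 - (5 - n) * 0.05
    return pats * conmod * 100


print(round(score_confidence([87, 94, 74, 66]), 4))  # 76.2375
print(round(score_confidence([87, 94, 74, 66])))     # 76
```

Adding a fifth source with the same average (for example, a Trust Score of 80.25) makes CONMOD equal to 1, and the result becomes 80.25: the “would have scored 80%” case.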


Expert Score

Accounts for 60% of the TrueScore.


Expert Scores are where we break out the magnifying glasses. We are here to test the testers and assure you that you’re getting trusted information from trustworthy experts.

That’s what Expert Scores, or ES, are for: they indicate the actual performance of a product in competitive, real-world tests. We check what Experts focus on to make sure they’re really doing the legwork. Using this info, we build everything from the bottom up, going from granular to overarching, and then present it to you from the top down so it’s easy to digest.

performance criteria

Performance criteria are finer qualities that live beneath a larger “umbrella”. Things like brightness, contrast ratio and color accuracy on a television could be considered performance criteria. Most of the criteria are also quantitative, meaning you won’t see some wishy-washy explanation. Brightness is measurable, and that’s how it’s reported and collected.

categories of performance

Categories of performance are the “umbrella” that covers the performance criteria we just talked about. The Experts should know (just like we know) what categories are applicable to and responsible for a quality product. From TVs to toasters, there’s always a set of qualities and metrics to be on the lookout for. We’re on the lookout for them. Google’s on the lookout for them.

the reason

So why do we approach things this way? We want to identify all of the key decision points on any product. By doing that, we can collect all of these data points and present them to you to help you make an informed decision. These aren’t just a few data points, either: our lists are enormous and filled to the brim with synonyms that help link related performance criteria together.
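As an illustration of how synonym lists can link performance criteria together, the sketch below maps the name a publication reports (say, “peak brightness”) to one canonical criterion and its category of performance. The synonym lists, the “picture quality” category, and `link_criterion` are hypothetical examples built from the TV criteria mentioned above.

```python
from typing import Optional

# Hypothetical synonym lists: canonical performance criterion -> names a publication might use.
SYNONYMS = {
    "brightness": {"brightness", "peak brightness", "luminance"},
    "contrast ratio": {"contrast ratio", "contrast"},
    "color accuracy": {"color accuracy", "colour accuracy"},
}

# Hypothetical category of performance (the "umbrella") for each canonical criterion.
CATEGORY_OF = {
    "brightness": "picture quality",
    "contrast ratio": "picture quality",
    "color accuracy": "picture quality",
}

# Invert the synonym lists once so each lookup is a single dictionary access.
ALIAS_TO_CRITERION = {
    alias: criterion for criterion, aliases in SYNONYMS.items() for alias in aliases
}


def link_criterion(reported_name: str) -> Optional[tuple[str, str]]:
    """Map a reported criterion name to (canonical criterion, category), or None if unknown."""
    criterion = ALIAS_TO_CRITERION.get(reported_name.strip().lower())
    if criterion is None:
        return None
    return criterion, CATEGORY_OF[criterion]


print(link_criterion("Peak Brightness"))  # ('brightness', 'picture quality')
print(link_criterion("refresh rate"))     # None
```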

publication trust score

We’ve developed a process that assesses how trustworthy an expert site is, and its result is known as the Publication Trust Score. The Publication Trust Score is the average of the scores given to each of the site’s product review categories, which are based on how trustworthy and in-depth each category’s content is. These individual scores are called Category Trust Scores.
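Because the Publication Trust Score is defined as a plain average of Category Trust Scores, it can be sketched in a few lines. The function name and the sample category scores are hypothetical, and the sketch assumes Category Trust Scores on a 0-100 scale with every category weighted equally, as “the average” suggests.

```python
from statistics import mean


def publication_trust_score(category_trust_scores: dict[str, float]) -> float:
    """Average of an expert site's Category Trust Scores (each assumed to be 0-100)."""
    if not category_trust_scores:
        raise ValueError("a publication needs at least one scored review category")
    return mean(category_trust_scores.values())


# Hypothetical Category Trust Scores for one expert site.
site = {"tvs": 92, "laptops": 81, "toasters": 64}
print(publication_trust_score(site))  # 79
```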


Customer Score

Accounts for 40% of the TrueScore.


You can’t ignore the voice of the people. Customers are huge when it comes to understanding how good a product really is. But in today’s world of fake reviews, customer ratings aren’t as safe to rely on as they should be.

And here’s exactly how we made them worth trusting again. Too many reviews on websites are naked ratings. All stars, no substance. These “one tap” reviews are easy to fudge. And even if a product isn’t faking it, many of these reviews are born out of apathy. Think of how easy it is to tap something on your phone.

ONLY VERIFIED REVIEWS

Customer Scores only draw on verified reviews.

no one-click reviews

Naked, one-tap star ratings don’t count toward the Customer Score.
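The two rules above, only verified reviews and no one-click reviews, can be read as a filter on which reviews feed the Customer Score. This is a minimal sketch that assumes a hypothetical `Review` record with a `verified` flag and a written `body`. Treating a review with stars but no text as “one-click” is also an assumption about what the rule means.

```python
from dataclasses import dataclass


@dataclass
class Review:
    stars: float    # star rating out of 5
    verified: bool  # whether the purchase is marked as verified (hypothetical field)
    body: str = ""  # written review text; empty for a "one tap" rating


def counts_toward_customer_score(review: Review) -> bool:
    """Keep only verified reviews that include written text (no naked star ratings)."""
    return review.verified and bool(review.body.strip())


reviews = [
    Review(5, verified=True, body="Bright screen, battery lasts all day."),
    Review(5, verified=False, body="Great!"),  # dropped: not verified
    Review(1, verified=True),                  # dropped: stars only, no substance
]
kept = [r for r in reviews if counts_toward_customer_score(r)]
print(len(kept))  # 1
```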

A Simple Breakdown

Ratings from Experts

The publication gives the product its own score.


Trust Score

Gadget Review gives every publication a Trust Score, which is then used to assign a confidence rating to the publication’s own score.

Large E-comm

Only verified reviews count.

Niche E-comm

One-click ratings don’t count.


Expert Score

An Expert Score is derived from the score given by a publication, and it accounts for 60% of the True Score.


Customer Score

A Customer Score is taken from customer reviews, and it accounts for 40% of the True Score.

True Score

The only score you need! Made up of the Expert Score and the Customer Score.
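Using the 60% / 40% split from the breakdown above, the blend can be sketched as a weighted sum. This is a simplification under stated assumptions. Both inputs are taken to be on a 0-100 scale. The logarithmic scaling and standardization mentioned in the True Score definition are left out because they aren’t specified.

```python
EXPERT_WEIGHT = 0.6    # Expert Score share of the True Score
CUSTOMER_WEIGHT = 0.4  # Customer Score share of the True Score


def true_score(expert_score: float, customer_score: float) -> float:
    """Linear 60/40 blend of Expert Score and Customer Score (both assumed 0-100).

    The logarithmic scaling and standardization steps are not specified,
    so this sketch leaves them out.
    """
    for name, value in (("expert_score", expert_score), ("customer_score", customer_score)):
        if not 0 <= value <= 100:
            raise ValueError(f"{name} must be between 0 and 100, got {value}")
    return EXPERT_WEIGHT * expert_score + CUSTOMER_WEIGHT * customer_score


print(round(true_score(90, 80), 2))  # 86.0
```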

Our Scores

There are currently 8 rating levels, based on multiples of 5, that represent various levels of “quality” of the BlendedScore. Each level comes with a sentiment and a description; the number of levels still needs statistical verification.

cosmic wonder

90%-100% excellent

- 1% of products. Winner of multiple product tests. Outperforms all the competition in Expert Score by at least 5%.

- 80% of customers rate it 4.5 stars.

absolutely fresh

80%-90% very good


mixed reviews

70%-80% good


cosmic wonder

60%-70% poor


absolutely fresh

BELOW 60% VERY POOR

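The rating levels above can be read as a lookup from a 0-100 score to its description. This sketch assumes each range’s lower bound is inclusive, because the published ranges, such as 80%-90% and 90%-100%, share their endpoints. The sentiment labels are left out because several of them repeat across levels.

```python
# Lower bound (inclusive, by assumption) and description for each rating level.
LEVELS = [(90, "excellent"), (80, "very good"), (70, "good"), (60, "poor")]


def rating_level(score: float) -> str:
    """Return the rating-level description for a 0-100 score."""
    if not 0 <= score <= 100:
        raise ValueError(f"score must be between 0 and 100, got {score}")
    for lower_bound, description in LEVELS:
        if score >= lower_bound:
            return description
    return "very poor"  # below 60%


print(rating_level(88))  # very good
```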

Awards!

Customer favorite

Products that earned more than 4.5 stars out of 5 across more than 100 reviews on at least two e-commerce sites.
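The Customer Favorite criteria can be checked per product as shown below. This sketch assumes the 4.5-star and 100-review thresholds apply to each e-commerce site separately, although the award text could also be read as a combined total. `SiteRating`, `is_customer_favorite`, and the shop names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SiteRating:
    site: str
    average_stars: float  # out of 5
    review_count: int


def is_customer_favorite(ratings: list[SiteRating]) -> bool:
    """More than 4.5 stars across more than 100 reviews, on at least two sites.

    Assumes both thresholds apply to each site separately.
    """
    qualifying_sites = {
        r.site for r in ratings if r.average_stars > 4.5 and r.review_count > 100
    }
    return len(qualifying_sites) >= 2


ratings = [
    SiteRating("shop-a.example", 4.7, 2310),
    SiteRating("shop-b.example", 4.6, 148),
    SiteRating("shop-c.example", 4.8, 37),  # too few reviews to count
]
print(is_customer_favorite(ratings))  # True
```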

expert pick

Awarded to products that place in the top 5 of at least 3 experts’ lists; lists shorter than 10 products don’t count.
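The Expert Pick rule can be sketched as a count over ranked lists. The sketch makes three assumptions: each list comes from a different expert, each list is ordered best-first, and products are identified by a shared name. `is_expert_pick` and the sample lists are hypothetical.

```python
def is_expert_pick(product: str, expert_lists: list[list[str]]) -> bool:
    """Top 5 on at least 3 experts' lists, ignoring lists shorter than 10 products."""
    placements = sum(
        1 for ranked in expert_lists if len(ranked) >= 10 and product in ranked[:5]
    )
    return placements >= 3


others = [f"laptop-{i}" for i in range(9)]
expert_lists = [
    ["our-laptop"] + others,                   # 1st of 10
    others[:3] + ["our-laptop"] + others[3:],  # 4th of 10
    others[:4] + ["our-laptop"] + others[4:],  # 5th of 10
    ["our-laptop"] + others[:4],               # 1st, but only 5 products, so ignored
]
print(is_expert_pick("our-laptop", expert_lists))  # True
```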

budget friendly

Products with at least a 60% TrueScore that are less expensive than 80% of products.

TEST WINNER

Product that measures highest in the most categories of performance.
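Picking a Test Winner comes down to counting category wins. This minimal sketch assumes `results` maps each category of performance to measured scores where higher is better; criteria where lower is better would need inverting first. The function name is hypothetical. Tied products are returned together because the award text doesn’t say how ties are broken.

```python
from collections import Counter


def find_test_winners(results: dict[str, dict[str, float]]) -> list[str]:
    """Products that measure highest in the most categories of performance.

    `results` maps category -> {product: score}; a higher score is assumed to be better.
    Ties are not broken: every product sharing the top win count is returned.
    """
    wins = Counter()
    for scores in results.values():
        if not scores:
            continue
        best = max(scores.values())
        # Every product matching the best score in this category earns a win.
        wins.update(product for product, score in scores.items() if score == best)
    if not wins:
        return []
    most_wins = max(wins.values())
    return sorted(product for product, count in wins.items() if count == most_wins)


results = {
    "picture quality": {"tv-a": 91, "tv-b": 88, "tv-c": 85},
    "sound": {"tv-a": 70, "tv-b": 82, "tv-c": 79},
    "smart features": {"tv-a": 86, "tv-b": 80, "tv-c": 84},
}
print(find_test_winners(results))  # ['tv-a']
```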

gr certified legit

Products with at least an 80% TrueScore.

best value

Products with at least an 80% TrueScore that are also less expensive than 80% of the products in the category.
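The three threshold-based awards above (Budget Friendly, GR Certified Legit, and Best Value) come down to a TrueScore cutoff, sometimes combined with a price check. This sketch makes two assumptions: “less expensive than 80% of products” is measured against the other products in the same category, and products are compared by a single price. `Product` and `awards` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Product:
    name: str
    true_score: float  # 0-100
    price: float


def share_more_expensive(product: Product, category: list[Product]) -> float:
    """Fraction of the other products in the category that cost more than this one."""
    others = [p for p in category if p is not product]
    if not others:
        return 0.0
    return sum(1 for p in others if p.price > product.price) / len(others)


def awards(product: Product, category: list[Product]) -> set[str]:
    """Threshold awards: budget friendly, GR certified legit, and best value."""
    cheap = share_more_expensive(product, category) >= 0.8
    earned = set()
    if product.true_score >= 60 and cheap:
        earned.add("budget friendly")
    if product.true_score >= 80:
        earned.add("gr certified legit")
    if product.true_score >= 80 and cheap:
        earned.add("best value")
    return earned


# Hypothetical laptop category: ten 70% laptops priced 500-1400, plus one cheaper 84% laptop.
category = [Product(f"laptop-{i}", 70, 500 + 100 * i) for i in range(10)]
star = Product("laptop-star", 84, 450)
category.append(star)
print(sorted(awards(star, category)))  # ['best value', 'budget friendly', 'gr certified legit']
```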